Hey everyone! As the project submission deadline nears, I have been working to finish my project. The Heads-Up Display (HUD) is rendering fine on the Oculus, interacting with the Leap Motion and receiving data from the server. So far, I have been able to integrate the HUD with my own demo model in Blender and simulate it on the Oculus. The final step was integrating the HUD with one of the model scenes available in the IMS V-ERAS repository.

This was a difficult task. The Italian Mars Society initially simulated its models through the Blender Game Engine for the DK1, but because Oculus support for Blender was very limited, they decided to switch to Unity instead. They now work only in Unity, and since I am working under the PSF organization, using Unity was not an option. Also, since a lot has changed from the Rift DK1 to the DK2, most of the models could not render successfully on the Oculus.

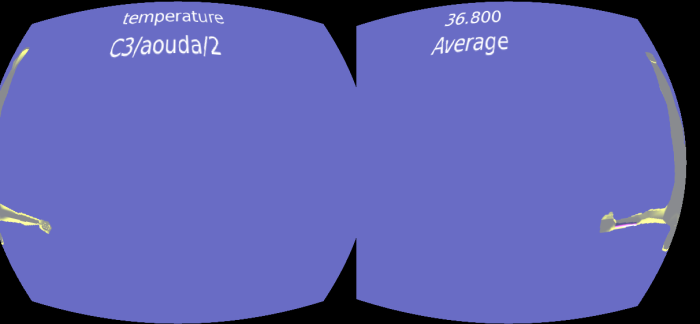

I had a short chat with my mentor about this issue, and he asked me to integrate the HUD with the models that render fully on the DK2 and set the others aside. After that, I found an avatar model and began integrating the HUD into it.

A couple of days later, I was able to render the HUD on the Oculus with one of the V-ERAS models through the Blender Game Engine, and the result looks good.

Currently, I am trying to turn the HUD into a Blender Game Engine add-on so that it can be imported into any model or scene and render successfully on the Oculus.

As for the final submission, I have started on the documentation and hope to submit it by the end of this week.

Final Post 😦